
Project Natick changed how the industry thinks about data centers. It was one of the most ambitious subsea computing experiments ever run, built on an idea simple enough for a teenager to imagine but one that almost nobody had seriously pursued. Here is how the project came about, what it showed about reliability, practicality, and sustainable energy use, and why it ended.

How Project Natick came to be

The initiative began in 2013 as a white paper by Sean James, a former U.S. Navy submariner. Aiming for energy-efficient, low-latency data centers, Microsoft researchers proposed placing servers underwater to take advantage of natural cooling and proximity to coastal populations.

The project’s slogan summed up the idea: “50% of us live near the coast. Why doesn’t our data?”

The name Natick was a randomly chosen codename. It refers to a town in Massachusetts and carries no particular meaning 1.

Project Natick Phase 1

The first phase began in 2015 with the deployment of a proof-of-concept vessel named Leona Philpot off California’s Pacific coast. It ran from August to November 2015 and demonstrated that underwater data centers are viable. The phase addressed major concerns such as cooling efficiency and biofouling under real-world conditions 2.

Project Natick Phase 2

Targeting large-scale deployment, the second phase saw a larger, shipping container-sized data center installed at the European Marine Energy Centre (EMEC) off the Orkney Islands, UK, in June 2018. Measuring 12.2 meters long and 2.8 meters in diameter (3.18 meters with external components), it was powered solely by renewable energy.

The data center ran for two years before being recovered in July 2020 for analysis. It proved highly reliable, with a server failure rate only 1/8th that of land-based data centers. It also supported COVID-19 research through efforts such as Folding@Home and the World Community Grid. After the experiment, all materials were recycled, and the seabed was restored to its previous condition. 3

Findings

- Reliability: 1/8th the failure rate of onshore facilities, attributed to a sealed, nitrogen-rich environment with no human intervention and constant temperatures.

- Durability: Withstood adverse ocean conditions for 2 years.

- Sustainability: Recorded a PUE (Power Usage Effectiveness) of 1.07, which is close to the theoretical optimum (1.0).
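
To make the PUE figure concrete: PUE is the ratio of total facility energy to the energy used by the IT equipment alone, so a value of 1.07 means about 7% overhead for cooling, power distribution, and similar loads. The sketch below is illustrative only. The function names and the meter readings are hypothetical, chosen to reproduce the reported 1.07, and are not taken from Microsoft’s measurements.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_fraction(pue_value: float) -> float:
    """Non-IT energy (cooling, power distribution, ...) per unit of IT energy."""
    return pue_value - 1.0


# Hypothetical readings (assumption): 107 kWh drawn in total for every
# 100 kWh consumed by the servers themselves.
natick_pue = pue(total_facility_kwh=107.0, it_equipment_kwh=100.0)
print(f"PUE = {natick_pue:.2f}, overhead = {overhead_fraction(natick_pue):.0%}")
```

A PUE of exactly 1.0 would mean every unit of energy goes to computing, which is why it is described as the theoretical optimum.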

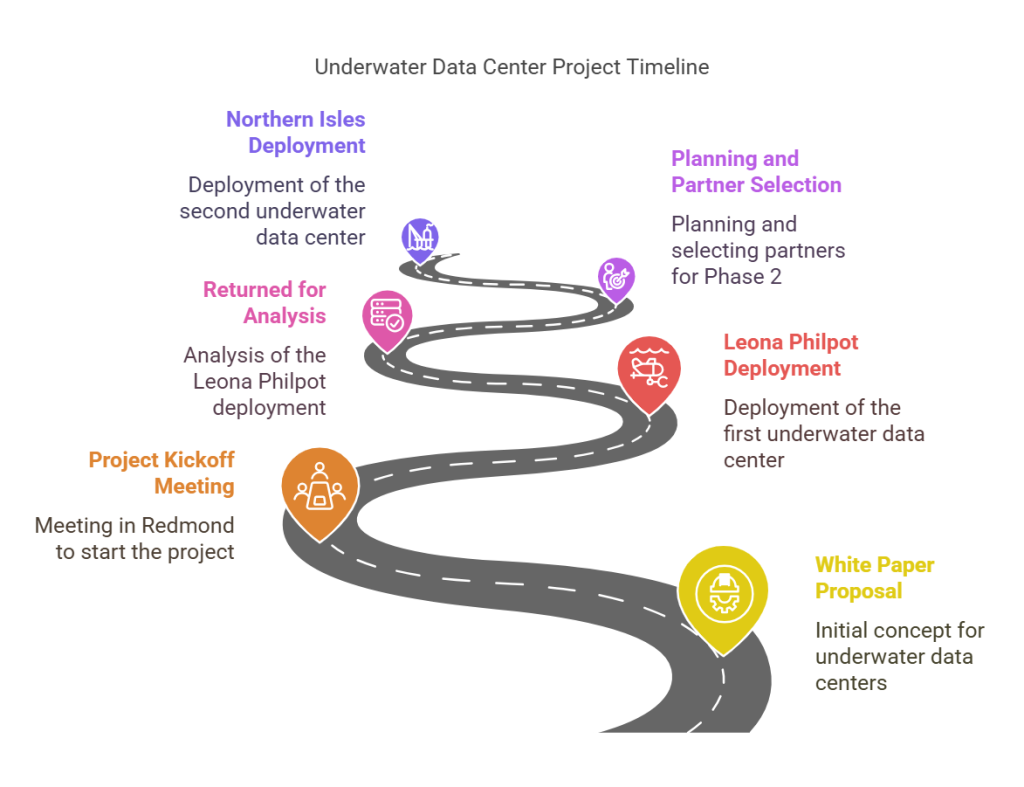

Project Natick Timeline

- 2013 – White paper suggesting underwater data centers.

- Late 2014 – Project kickoff meeting in Redmond, Washington.

- August 2015 – Leona Philpot prototype deployed off the Pacific coast.

- December 2015 – Prototype retrieved for analysis.

- September 2015 – November 2016 – Planning and partner selection for Phase 2.

- June 2018 – Northern Isles data center deployed at the European Marine Energy Centre (EMEC), Orkney Islands, UK.

- July 2020 – Data center retrieved, final analysis completed.

Research Findings and Technical Insights

Project Natick gave useful insights into the efficiency and feasibility of underwater data centers.

Reliability

- During Phase 2, 855 servers were submerged for 25 months and eight days, and only six failed.

- In the land-based comparison group, eight of 135 servers failed. Per server, the underwater unit was therefore roughly eight times more reliable.

- This was due to the nitrogen-filled environment and absence of human interference, which minimized corrosion and vibration effects.
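
The eightfold figure follows directly from the server counts above. The short calculation below reproduces it. It is a sketch that assumes both groups were observed over a comparable period, which the figures quoted here do not state explicitly, and the function name is illustrative.

```python
def failure_rate(failed: int, total: int) -> float:
    """Fraction of servers that failed during the observation period."""
    if total <= 0:
        raise ValueError("total must be positive")
    return failed / total


# Counts quoted in the list above.
underwater = failure_rate(failed=6, total=855)
land = failure_rate(failed=8, total=135)

# How many times higher the land-based failure rate was.
ratio = land / underwater

print(f"underwater: {underwater:.2%}, land: {land:.2%}, ratio: {ratio:.1f}x")
```

This works out to about 0.70% underwater versus 5.93% on land, a ratio of roughly 8.4, consistent with the "1/8th the failure rate" summary.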

Sustainability

- Achieved zero water use, relying on ocean cooling instead of traditional water-based cooling systems.

- Powered entirely by renewable energy, including wave, tidal, wind, and solar power from the Orkney grid mix.

- Designed for full recyclability, with the steel pressure vessel and heat exchangers recycled after retrieval.

Rapid Provisioning

- Deployment could be completed in under 90 days, offering a significant advantage in market responsiveness.

- Latency can be reduced by siting data centers near coastal populations: about half of the world’s population lives within 120 miles of the ocean.
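
The latency argument can be illustrated with a rough propagation-delay estimate. This sketch assumes signals travel through optical fiber at about two thirds of the speed of light in a vacuum and ignores routing, switching, and queuing delays, so it gives a lower bound only. The 1,200-mile comparison distance is a hypothetical inland site, not a figure from the project.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
FIBER_VELOCITY_FACTOR = 2 / 3      # assumption: typical value for optical fiber
KM_PER_MILE = 1.609344


def one_way_delay_ms(distance_miles: float) -> float:
    """Lower-bound one-way propagation delay over fiber, in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    speed_km_s = SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR
    return distance_km / speed_km_s * 1000


# 120 miles is the coastal figure from the text; 1200 miles is hypothetical.
for miles in (120, 1200):
    delay = one_way_delay_ms(miles)
    print(f"{miles:>5} miles: {delay:.2f} ms one way, {2 * delay:.2f} ms round trip")
```

Under these assumptions, a user 120 miles from the data center sees roughly 1 ms of one-way propagation delay, and the delay grows linearly with distance.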

Challenges and Limitations

Size Limitations

- Vessel size is currently limited by construction methods.

- Microsoft’s 40-foot Phase 2 vessel contrasts with Subsea Cloud’s commercial pods at 20 feet.

- Large-scale deployment viability is in doubt.

Environmental Impact

- Impacts on marine ecosystems are not yet fully known.

- Regulatory issues have arisen. For example, NetworkOcean’s underwater data center project in San Francisco Bay ran into permitting hurdles over environmental concerns.

Security Risks

- Acoustic attacks present a new threat to underwater servers.

- Experiments indicate that sound at resonant frequencies (e.g., a high D note) might crash or destabilize servers, posing security risks.

Reasons for Discontinuation

Despite its technical success, Project Natick was discontinued in 2024, chiefly for practical and economic reasons. Deploying and maintaining underwater data centers proved far more complicated than running conventional facilities on land: with only restricted access to the submerged hardware, maintenance and upgrades were difficult.

Economic feasibility was also a determining factor. Specialists contend that it might not be economically viable to scale underwater data centers, considering the expense of building, deploying, and meeting regulatory requirements. The project was not likely to be successful at a commercial level.

Regulatory challenges complicated the effort further. Permitting underwater infrastructure can take months or years, especially where environmental regulations apply. The case reported in “An Underwater Data Center in San Francisco Bay? Regulators Say Not So Fast” shows how legal hurdles can delay or block deployment.

Microsoft has since turned its attention to land-based innovations, with a focus on AI-powered data centers. According to the report “Microsoft expects to spend $80 billion on AI-enabled data centers in fiscal 2025”, the company plans to spend $80 billion on AI-enabled infrastructure in fiscal 2025, a strategic shift toward more scalable and currently viable solutions.

Future Possibilities and Industry Trends

Although Project Natick was discontinued, the idea of underwater data centers remains relevant, with potential applications and growing industry interest. These centers may still find use in niche settings, especially where traditional data infrastructure is limited.

Underwater data centers might also bring faster, more reliable, low-latency connectivity to coastal areas and remote islands. Some researchers suggest they could strengthen internet infrastructure in underdeveloped regions.

Integration with marine renewable energy is another option. Sited next to offshore wind farms or tidal energy installations, underwater data centers could lower infrastructure costs and improve sustainability. However, available marine renewable energy capacity is currently a limiting factor, since at least 100 MW would be needed for widespread deployment.

Materials and construction technology could also shape the future of underwater data centers. One proposed design uses long steel frames holding several cylinders of servers, which could allow deeper and more stable installations. Others believe later versions might be installed at depths of more than 117 feet, improving cooling efficiency and security.

The private sector remains interested in underwater computing. NetworkOcean is considering similar installations but faces regulatory pressure, as in the recent San Francisco Bay permitting dispute. China’s Highlander, however, installed a 1,433-ton commercial underwater data center near Hainan Island in 2023, indicating international interest in the technology.

The growing need for sustainable and AI-optimized data centers might bring a resurgence of underwater solutions. As the sector moves toward liquid cooling, nuclear-powered data centers, and highly efficient computing, underwater infrastructure might again become a practical option. Experts monitoring data center trends for 2025 expect that sustainability pressures and AI-fueled computing requirements might push investment into underwater solutions again.

Still, there are issues to work through. Environmental assessments must account for long-term effects on marine habitats, scalability problems remain to be solved, and security threats such as acoustic attacks that could compromise servers need more investigation. Continued debate across industry and academia shows that, although Project Natick has closed, the idea of underwater data centers has not been forgotten.

FAQs

Why Didn’t They Install Sound Pads to Block Outside Noise?

Recent research, such as a 2024 study from the University of Florida (Underwater data centers are the future. But a speaker system could cripple them), identified that underwater data centers like Natick’s could be vulnerable to acoustic attacks. These attacks involve sound waves at specific resonant frequencies (e.g., a high D note) that could vibrate hard drives, causing them to crash. During Project Natick’s Phase 2 (2018-2020), the underwater data center off the Orkney Islands operated successfully for two years without reported acoustic disruptions, suggesting this vulnerability wasn’t a primary concern during testing. However, the issue emerged as a theoretical risk post-retrieval, highlighting a potential flaw in long-term underwater deployments.

- Adding soundproofing materials like foam pads or acoustic panels would require space, potentially reducing the number of servers (855 in Phase 2) or complicating the compact, watertight design.

- Weight and buoyancy were critical for deployment at a depth of 117 feet. Extra materials could alter the vessel’s balance, making it harder to sink or retrieve.

Why Not Install Data Centers in an Artificial Lake to Protect Marine Life?

Project Natick’s environmental impact on marine ecosystems was a noted concern, though not a primary reason for its discontinuation. The Phase 2 deployment off the Orkney Islands restored the seabed post-retrieval, and Microsoft claimed minimal ecological disruption due to the vessel’s small footprint and lack of emissions (Microsoft finds underwater datacenters sustainable). However, broader speculation—e.g., from regulatory debates around NetworkOcean’s San Francisco Bay proposal—highlighted potential risks like noise pollution, heat discharge, or interference with marine habitats (An Underwater Data Center in San Francisco Bay?). Long-term impacts remain understudied, fueling environmental caution.

- Oceans provide vast, consistent cooling—Natick’s vessel leveraged 48°F (9°C) water at depth for passive heat exchange. Artificial lakes, especially inland, might not match this. Surface water temperatures vary widely (e.g., 32°F to 85°F seasonally), and smaller volumes could heat up faster from server output, reducing efficiency (Underwater Datacenters).

- Oceans allow rapid deployment near coastal populations (half the world lives within 120 miles of the sea), reducing latency. Artificial lakes would need construction (land acquisition, excavation, and water management), negating Natick’s 90-day provisioning advantage and raising costs (Underwater Data Centers: Servers Beneath the Surface). Space is another limiter: oceans are vast, while artificial lakes are finite and might not scale for multiple data centers.

Key Citations

- Project Natick Phase 2 official page

- Microsoft swears its naming of Project Natick was random

- Microsoft confirms Project Natick underwater data center is no more

- Microsoft discontinues ‘Project Natick’, the ambitious underwater data centre experiment

- Microsoft scrapped its ‘Project Natick’ underwater data center trial — here’s why it was never going to work

- What Is Microsoft’s Project Natick? detailed explanation

- Microsoft Research Project Natick overview

- Microsoft ends Project Natick, the experiment of placing big metal tubes under the sea

- Explore underwater data centers’ viability, sustainability analysis

- Underwater data centers are the future. But a speaker system could cripple them, security concerns

- Are Underwater Data Centers Truly Practical? industry perspective

- Microsoft finds underwater datacenters are reliable, practical and use energy sustainably, detailed findings

- Underwater Datacenters, detailed investigation

- Underwater Data Centers: Servers Beneath the Surface, future speculations

- An Underwater Data Center in San Francisco Bay? Regulators Say Not So Fast, regulatory challenges

- 8 Trends That Will Shape the Data Center Industry In 2025, industry trends

- Underwater Data Centers Reddit discussion, community insights

- Project Natick Press Kit, historical details

- Under the sea: Microsoft tests a datacenter that’s quick to deploy, could provide internet connectivity for years, phase 2 details

- Additional Project Natick story, phase 2 insights

- Folding at Home COVID-19 research support

- World Community Grid COVID-19 research support
