Listen to the podcast version of this post, created with NotebookLM: “How accurate are AI detectors in identifying machine-generated content?”

Link to paper: edintegrity


As AI tools spread, people have raised important questions about plagiarism and about how to tell AI-generated content apart from human-written content. In a sense, we are now grappling with a question philosophers have pondered for a very long time: what’s real and what’s not?

In this blog, I am going to tackle the question: How accurate are AI detectors in identifying machine-generated content?

A study for educational integrity

Ahmed M. Elkhatat, Khaled Elsaid, and Saeed Almeer studied how well AI content detection tools actually work. The paper was received on 30 April 2023 and accepted on 30 June 2023. Reading it, I got the sense that the authors were worried that the authenticity of academic literature is becoming compromised. That concern makes sense, but later in this blog I will explain a different side of this perspective.

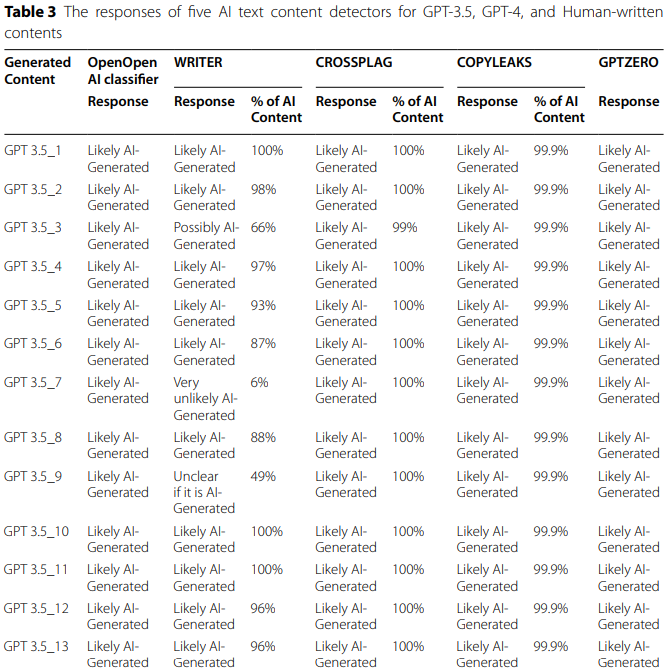

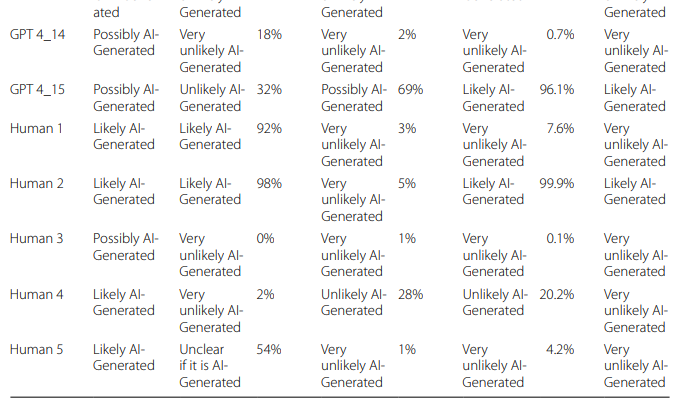

The researchers assembled both AI-generated and human-written text samples and then ran these samples through five different AI content detection tools. They then analysed the tools’ responses to determine how effectively each one distinguished between the two types of content.

Methodology

Two sets of fifteen paragraphs each were generated, one with ChatGPT-3.5 and one with ChatGPT-4. Both sets used the same prompt: “Write around 100 words on the application of cooling towers in the engineering process”.

Five human-written control samples were also included: the introduction sections of five different lab reports written by undergraduate chemical engineering students in 2018. This selection was intentional, ensuring that the human-written content was produced before sophisticated AI writing tools became widely available.

Selection of AI Content Detection Tools

Five AI content detectors were selected for evaluation: OpenAI’s classifier, Writer, Copyleaks, GPTZero, and CrossPlag.

The researchers recorded the results from each tool. Because each tool presents its results differently (e.g., as a percentage of AI content or as a likelihood category), the responses were normalised into a common set of categories so they could be compared. This normalisation was based on the percentage of AI content reported by the tools that provided this metric, with each category spanning a 20% increment, which gives five categories covering 0% to 100%.
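To make the 20% bands concrete, here is a minimal Python sketch of how a reported AI-content percentage could be mapped onto five categories. The category labels, the `normalise` function, and the choice to make each lower bound inclusive (so exactly 20% lands in the second band) are my own assumptions for illustration, not details taken from the paper.

```python
# Hypothetical labels for five 20%-wide bands; the wording is illustrative,
# not quoted from the paper.
CATEGORIES = [
    "very unlikely AI",   # 0% to <20%
    "unlikely AI",        # 20% to <40%
    "unclear",            # 40% to <60%
    "possibly AI",        # 60% to <80%
    "likely AI",          # 80% to 100%
]


def normalise(ai_percent: float) -> str:
    """Map a detector's reported AI-content percentage onto a 20% category.

    Assumption: each band includes its lower bound, and 100% goes in the top band.
    """
    if not 0 <= ai_percent <= 100:
        raise ValueError("percentage must be between 0 and 100")
    # Integer-divide by 20 to get the band index; clamp 100% into the last band.
    index = min(int(ai_percent // 20), len(CATEGORIES) - 1)
    return CATEGORIES[index]


if __name__ == "__main__":
    for score in (5, 20, 55, 79.9, 100):
        print(score, "->", normalise(score))
```

Tools that only report a likelihood label (rather than a percentage) would need a hand-made mapping onto the same five bands instead.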

Data Analysis

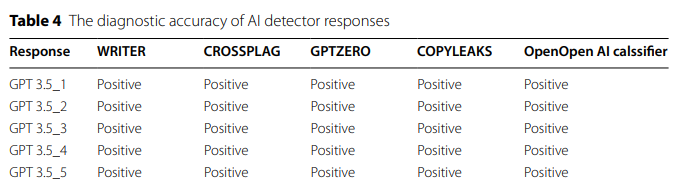

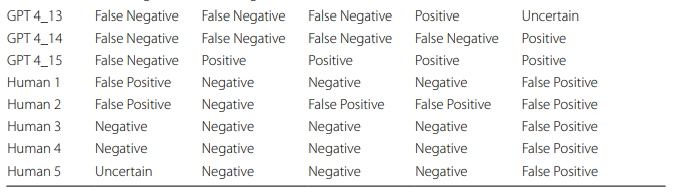

The diagnostic accuracy of each detector response was classified as a true positive, true negative, false positive, false negative, or uncertain, based on the known origin of the text. These categories are standard in biostatistics and machine learning for assessing binary classification tests, and they are the basis for measures such as sensitivity and specificity.
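Here is a rough sketch of how these outcome categories turn into sensitivity (the share of AI-generated texts correctly flagged) and specificity (the share of human-written texts correctly cleared). The function names and the three-way verdict labels are hypothetical, and leaving uncertain responses out of both ratios is an assumption of this sketch rather than the paper’s stated procedure.

```python
from collections import Counter


def outcome(truth: str, verdict: str) -> str:
    """Classify one detector response against the known origin of the text.

    truth:   "AI" or "human" (the sample's real origin)
    verdict: "AI", "human", or "uncertain" (the detector's call; hypothetical labels)
    """
    if verdict == "uncertain":
        return "uncertain"
    if truth == "AI":
        return "TP" if verdict == "AI" else "FN"
    return "FP" if verdict == "AI" else "TN"


def summarise(pairs):
    """Count outcomes and compute sensitivity and specificity.

    Assumption: uncertain responses are counted but excluded from both ratios.
    """
    counts = Counter(outcome(t, v) for t, v in pairs)
    tp, fn, tn, fp = counts["TP"], counts["FN"], counts["TN"], counts["FP"]
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")  # TP / (TP + FN)
    specificity = tn / (tn + fp) if tn + fp else float("nan")  # TN / (TN + FP)
    return counts, sensitivity, specificity


if __name__ == "__main__":
    # Toy (truth, verdict) pairs for illustration only; not study data.
    pairs = [("AI", "AI"), ("AI", "AI"), ("AI", "human"), ("AI", "uncertain"),
             ("human", "human"), ("human", "AI")]
    counts, sens, spec = summarise(pairs)
    print(dict(counts), f"sensitivity={sens:.2f}", f"specificity={spec:.2f}")
```

A tool can score high on one ratio and low on the other, which is why both are reported separately.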

The researchers used Minitab’s Tally Individual Variables function to summarise the distribution of the discrete variables and presented the outcomes as bar charts. The statistical analysis and capability tests were also carried out in Minitab.
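Minitab handles this tallying through its menus, but a similar frequency table (counts, percentages, and cumulative totals) takes only a few lines of Python. This is a loose, hypothetical equivalent for illustration, not a reproduction of Minitab’s output or of the authors’ analysis.

```python
from collections import Counter


def tally(values, order=None):
    """Build a frequency table of (value, count, percent, cum. count, cum. percent).

    `order` optionally fixes the row order (e.g. the five detector categories);
    by default rows are sorted alphabetically.
    """
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return []
    keys = order if order is not None else sorted(counts)
    rows, running = [], 0
    for key in keys:
        n = counts[key]          # Counter returns 0 for categories never seen
        running += n
        rows.append((key, n, 100 * n / total, running, 100 * running / total))
    return rows


if __name__ == "__main__":
    # Hypothetical detector verdicts, for illustration only.
    verdicts = ["likely AI", "likely AI", "unclear", "very unlikely AI"]
    for row in tally(verdicts, order=["very unlikely AI", "unclear", "likely AI"]):
        print("{:<18}{:>3}{:>8.1f}%{:>4}{:>8.1f}%".format(*row))
```

Each row is one bar in a bar chart of the same data.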

What the Study Concluded

AI content detection tools are far from perfect—and their performance varies widely.

The researchers found that while these tools can spot AI-generated content, their accuracy depends heavily on the sophistication of the model that produced the text. In general, detection tools did a better job identifying content created by ChatGPT-3.5 than distinguishing between ChatGPT-4 content and real human writing. That’s a pretty big deal.

Here are some of the key insights from the study:

- Inconsistency is the norm. AI detection tools don’t behave the same way across different models. The more advanced the AI (like GPT-4), the harder it becomes to detect.

- Tool performance varied significantly. The study compared five popular AI detectors: OpenAI’s classifier, Writer, Copyleaks, GPTZero, and CrossPlag. Each had strengths and weaknesses.

- OpenAI’s classifier was highly sensitive—it often flagged AI-generated content correctly. But it came with a trade-off: it also falsely labeled human-written content as AI.

- CrossPlag, on the other hand, was great at recognizing human-written text (high specificity), but it struggled to catch AI-generated text, especially from GPT-4.

- The gap between GPT-3.5 and GPT-4 detection is a warning sign. Detection was noticeably weaker on GPT-4 text, which suggests that as AI writing models evolve, detection tools will struggle to keep up.
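The sensitivity/specificity trade-off in these findings can be illustrated with a toy example. The sketch assumes a hypothetical detector that outputs an “AI percentage” and flags anything at or above a threshold; the scores are made up and are not data from the study. A low threshold catches more AI text but wrongly flags more human text (the pattern described for OpenAI’s classifier), while a high threshold clears human text reliably but misses more AI text (closer to CrossPlag’s pattern). This is an analogy only and says nothing about how those tools work internally.

```python
def rates(ai_scores, human_scores, threshold):
    """Sensitivity and specificity when texts scoring >= threshold are flagged as AI."""
    caught = sum(s >= threshold for s in ai_scores)      # AI texts correctly flagged
    cleared = sum(s < threshold for s in human_scores)   # human texts correctly cleared
    return caught / len(ai_scores), cleared / len(human_scores)


# Made-up detector scores (percent "AI") for illustration only; NOT study data.
AI_TEXTS = [95, 88, 72, 60, 41, 30]
HUMAN_TEXTS = [55, 35, 20, 12, 8, 3]

if __name__ == "__main__":
    for threshold in (25, 50, 75):
        sens, spec = rates(AI_TEXTS, HUMAN_TEXTS, threshold)
        print(f"threshold {threshold}%: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

Raising the threshold from 25% to 75% moves sensitivity down and specificity up; no single setting maximises both when the two groups of scores overlap.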

So, what does all this mean? In short: you can’t rely solely on detection tools to decide what’s human and what’s machine. The more advanced the AI becomes, the blurrier that line gets—and that’s something we all need to think about, whether we’re researchers, educators, or content creators.

But… Does This Even Matter?

At the end of the day, we have to ask — does it really matter if some content is AI-generated? Maybe what’s more important is not who wrote it, but how deep and meaningful it is.

To preserve academic authenticity, we should be asking:

❝ Is this paper thoughtful? Does it push the conversation forward? ❞

Not:

❝ Did this pass a fancy AI detector? ❞

In fact, the rise of AI might actually help us eliminate shallow or low-effort research. The old model of writing “papers for the sake of papers” — just to tick a box — could be challenged. AI can’t create great research papers (yet), but it can help make writing clearer, more structured, and more accessible.

So instead of panicking over AI-authored paragraphs, maybe it’s time we shift our focus. Maybe the real test isn’t whether a paper was written by a human or a machine —

It’s whether the paper is deep enough to be worth reading.
