
Introduction

Recent economic shifts reveal a striking paradox: corporate giants raise half a trillion dollars to dominate artificial intelligence (AI), only to be outpaced by nimble innovators spending as little as $5 million. This disparity underscores a critical truth: our grasp of AI’s potential remains uncertain, clouded by hype and misdirection. In this post, I cut through the noise to examine the current state of AI development, its implications for global leadership, and the strategic choices ahead for diplomats, intellectuals, and world leaders.

Is AI Even a Threat?

When discussing the potential dangers of AI, one name that frequently comes up is Elon Musk. In many ways, he has become the Newton of our era. His concerns about AI stem from a relentless focus on advancing civilization while anticipating its greatest threats. To grasp the truth, we must ask: what is the ultimate “end game” of any transformative technology?

Consider personal computing as a precedent. Sceptics once dismissed it, claiming, “Not everyone needs a computer.” Yet today, universal access is a reality. Now apply the same logic to space travel: I think everyone will eventually have a personal spaceship capable of interplanetary travel.

So, what is AI’s end game?

I believe that AI will eventually surpass human capabilities—not just in simple automation but in groundbreaking discoveries. Imagine an AI making Nobel Prize-winning breakthroughs every second, without the need to eat, sleep, or deal with concentration lapses.

Now, if humans dominate species like chickens and rats due to our intelligence, why wouldn’t AI—once it surpasses us—assume the same role over humanity?

The X Approach

This is the perspective of a cynic—someone who believes that if AI could truly see the truth, it would wipe us all out. But why? If it’s smarter than us, wouldn’t it also recognize that mass destruction leads nowhere?

We’ve moved on from imperialism and genocide. Why do we assume the truth AI will discover is one of mass murder? That’s simply not the reality. We’ve long since realized that exploiting people doesn’t lead to more productivity, and maybe AI will understand what we already know—the best way to live is through love, intellectual debate over ideological differences, and forgiveness when we inevitably hurt each other.

We’ve also learned that athletics and physics push us to become better, to test our limits. That’s what makes us human—pushing our bodies, pushing our minds, learning, and yes, making mistakes. But AI isn’t human. It might not make mistakes like we do, but that doesn’t mean it will be evil.

Elon Musk’s approach is to create a truth-seeking AI with infinite curiosity. The logic? If AI seeks truth, it will understand that killing is bad. If it is genuinely curious, it will find a world with humans in it far more interesting than a world without them. And in the long run, this curiosity could lead AI to advance physics and biology in ways we can’t even imagine.

AGI and Superintelligence

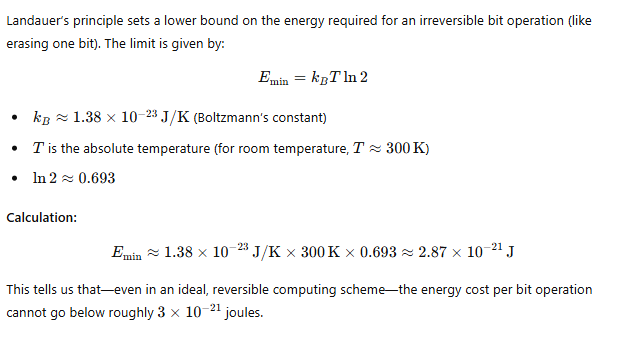

Energy per Bit Operation

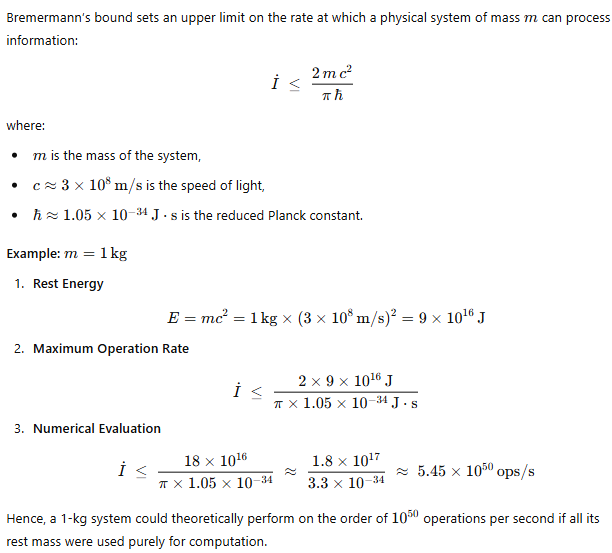

Computational Speed Limits

From a physics perspective, the fundamental limits set by energy requirements (via Landauer’s principle) and the maximum computational rates (via Bremermann’s bound and the Margolus-Levitin theorem) indicate that the universe permits far more computation than what is needed to emulate human-level intelligence. In other words, there is no known physical law that would make AGI impossible; rather, the challenge lies in engineering and understanding complex cognitive processes.
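To make these three limits concrete, here is a minimal sketch that evaluates each formula with the exact SI constants: Landauer's bound k_B·T·ln 2 per erased bit, Bremermann's bound m·c²/h bits per second, and the Margolus-Levitin bound 2E/(πħ) operations per second. The brain-comparison inputs (a ~20 W power budget and a 310 K body temperature) are my own illustrative assumptions, not figures taken from the charts above.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K (exact SI value)
H = 6.62607015e-34       # Planck constant, J*s (exact SI value)
HBAR = H / (2 * math.pi) # reduced Planck constant
C = 299_792_458.0        # speed of light, m/s (exact SI value)

def landauer_energy_per_bit(temperature_k: float) -> float:
    """Minimum energy (J) to erase one bit at temperature T: k_B * T * ln 2."""
    return K_B * temperature_k * math.log(2)

def bremermann_bits_per_second(mass_kg: float) -> float:
    """Bremermann's bound: maximum bit rate for a system of mass m, m * c^2 / h."""
    return mass_kg * C**2 / H

def margolus_levitin_ops_per_second(energy_j: float) -> float:
    """Margolus-Levitin bound: max orthogonal state changes per second for
    average energy E above the ground state, 2E / (pi * hbar) = 4E / h."""
    return 2 * energy_j / (math.pi * HBAR)

# Assumptions for illustration only: ~20 W is a commonly cited power budget
# for the human brain, and 310 K is roughly body temperature.
BRAIN_POWER_W = 20.0
BODY_TEMP_K = 310.0

e_bit = landauer_energy_per_bit(BODY_TEMP_K)
print(f"Landauer limit at {BODY_TEMP_K:.0f} K: {e_bit:.2e} J per bit erased")
print(f"Bit erasures/s affordable on {BRAIN_POWER_W:.0f} W: {BRAIN_POWER_W / e_bit:.1e}")
print(f"Bremermann bound for 1 kg: {bremermann_bits_per_second(1.0):.2e} bits/s")
print(f"Margolus-Levitin bound for 1 J: {margolus_levitin_ops_per_second(1.0):.2e} ops/s")
```

Bremermann's bound for 1 kg comes out near the commonly quoted 1.36 × 10^50 bits per second. These are ceilings on what physics allows, not estimates of what any real hardware or brain actually achieves.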

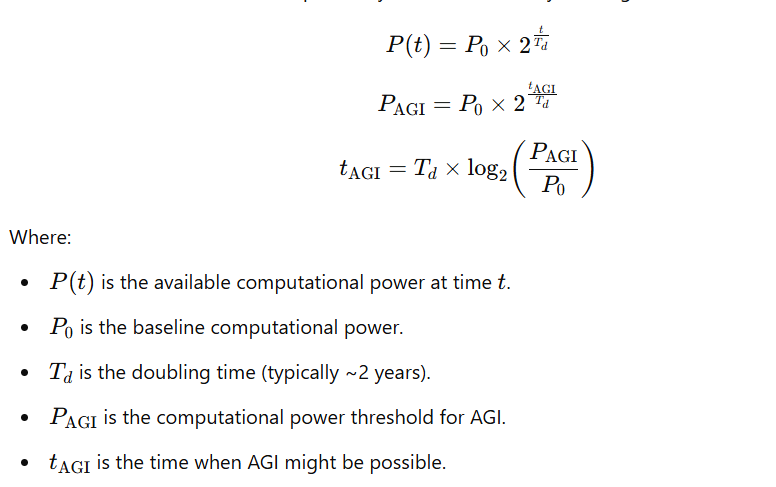

These calculations are highly heuristic and rely on assumptions that remain very much open to debate. They yield an estimate of roughly 20 years from a baseline year, which, depending on the starting point, lands in the 2030s or 2040s.
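One common way to build such a heuristic timeline is simple exponential extrapolation: if a capability gap of factor G closes by doubling every T years, it closes in about T·log₂(G) years. The sketch below uses hypothetical inputs (a 1,024× gap and a 2-year doubling time) chosen only to show the arithmetic. They are not the figures behind the estimate above.

```python
import math

def years_to_close_gap(gap_factor: float, doubling_time_years: float) -> float:
    """Years of steady exponential growth needed to multiply a quantity by gap_factor."""
    return doubling_time_years * math.log2(gap_factor)

# Hypothetical inputs for illustration only: a 1,024x shortfall in
# effective compute that doubles every 2 years.
gap = 1024.0
doubling = 2.0
years = years_to_close_gap(gap, doubling)
print(f"{years:.1f} years")

# Shifting the baseline year shifts the arrival date by the same amount.
for baseline in (2015, 2025):
    print(baseline, "->", round(baseline + years))
```

The output is very sensitive to both inputs: halving the doubling time halves the horizon, which is why the baseline and growth assumptions deserve as much scrutiny as the result.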

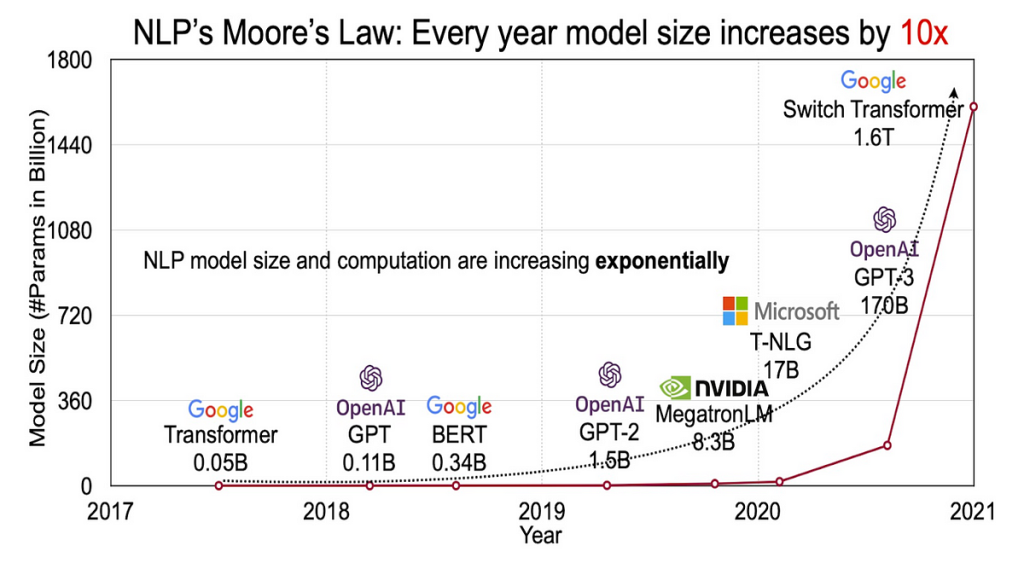

Current Situation

Right now, we’re using a transformer model architecture—one that’s absolutely terrible at reasoning, planning, and executive-level functions. This shouldn’t come as a shock to anyone with an IQ above 60.

We’re seeing some progress with reasoning models, which essentially feed their own output back into themselves over many steps to work through “gotcha” moments and push past analysis roadblocks. But because these models still rely on subword-level tokenization, this approach is just a band-aid, not a real fix.

The core issue is subword tokenization, an inherent limitation in how current AI models process language. Eventually, we’ll move past this with byte-level architectures such as byte-latent transformers, or with more advanced word vectorization. But for now, we’re stuck with AI that can mimic intelligence without actually thinking.
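To show what subword tokenization hides, here is a toy sketch contrasting a greedy longest-match segmenter (loosely in the spirit of WordPiece) with plain UTF-8 byte tokenization. The vocabulary `TOY_VOCAB` and both helper functions are hypothetical and written for illustration. Real tokenizers such as BPE, WordPiece, or Unigram learn much larger vocabularies from data and map pieces to integer IDs.

```python
# Hypothetical toy vocabulary; real tokenizers learn theirs from a training corpus.
TOY_VOCAB = {"straw", "berry", "s", "t", "r", "a", "w", "b", "e", "y"}

def greedy_subword_tokenize(word: str, vocab: set) -> list:
    """Split a word by repeatedly taking the longest vocabulary entry
    that matches at the current position (greedy longest match)."""
    tokens = []
    i = 0
    while i < len(word):
        for j in range(len(word), i, -1):  # try the longest candidate first
            piece = word[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            raise ValueError(f"no vocabulary entry matches at position {i}: {word[i:]!r}")
    return tokens

def byte_tokenize(text: str) -> list:
    """Byte-level view: every UTF-8 byte is its own unit."""
    return list(text.encode("utf-8"))

word = "strawberry"
print(greedy_subword_tokenize(word, TOY_VOCAB))  # ['straw', 'berry']
print(byte_tokenize(word))
```

With subwords, the model receives two opaque units and must memorize that "berry" contains two r's. At the byte level, all ten characters, including the three r's, are directly visible, at the cost of much longer sequences, which is the trade-off byte-latent designs try to manage.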

Will we have AGI? YES.

Will it come from LLMs or transformers? GOD NO.

~ Amarnath Pandey

A Vision of a Meritocratic Society

I want to give you a vision—a society capable of producing ten Elon Musks per country. A world where raw intelligence, creativity, and innovation aren’t wasted but cultivated at scale. But before we can get there, we need to address a fundamental flaw in our current system: the Matthew Effect.

Matthew Effect

“To those who have, more will be given; to those who have little, even that will be taken away.”

This isn’t just unfair—it’s the cold, hard truth. And because of it, we waste an enormous amount of genetic hyper-intelligence.

Think about it—the undiscovered genius in Nigeria who never even touched a violin, the mathematical prodigy in North Korea whose potential will never be realized. Even Elon Musk himself—if his school bullying had been just a little worse—might not have made it. It was brutal enough that he could have died.

How many future innovators, scientists, and visionaries are we losing every day simply because they were never given a chance? If we ever hope to become a multi-planetary civilization, we must counteract the Matthew Effect. This is what DEI was meant to counteract, but instead, it created a woke mob that tries to silence the truth rather than elevate those with potential.

The path to improvement isn’t through an ideology of entitlement but through a masculine ideology that both men and women should and must follow. Instead of handing everything to everyone, we must expose people to everything that makes them strong. Truth survives because it is authentic; ignorance, on the other hand, needs lies and deceit to manifest itself in reality.

AI has tremendous power to fix this. Imagine a tailor-made tutor: a top 1% teacher who knows everything about almost everything. The best part? This tutor could be available not only to the richest kid but also to a child in extreme poverty in India.

If implemented correctly, AI has the potential to empower people across the world—giving every child, regardless of background, a fair shot at greatness.

Suggestion for World Leaders

Right now, the most advanced AIs are being used for propaganda and censorship. But instead of wasting government resources on controlling narratives, why not use them to empower and liberate students through education?

I firmly believe that if we educate people properly—teaching them philosophy, ethics, and critical thinking—we can build a truly meritocratic society where talent and intelligence rise naturally, rather than being suppressed by systemic inequalities.

And to world leaders, I say this:

“If war is all you want, I give you planets to fight over instead of pieces of land.”

~ Amarnath Pandey

Because in the end, this is the only way to ensure a truly diverse, inclusive, and advanced society.
